Data is the rocket fuel behind AI. But if your tank is half-empty– missing entire communities, cultural contexts, or lived experiences—your rocket doesn’t launch; it misfires. In the rush to automate everything, many AI-powered “insights” engines run not on representative datasets, but on whatever is easiest to scrape, buy, or recycle. That convenience creates cultural gaps with real consequences. Brands walk into campaigns believing they have proof, but what they really have is partial truth—data that reflects availability, not accuracy. Ignoring those gaps doesn’t make them benign. It makes them dangerous.

AI today is trained overwhelmingly on datasets that skew toward the most represented groups, languages, and lifestyles. Recent research shows vision-language models generate more homogeneous, stereotyped descriptions when interpreting images of darker-skinned Black individuals compared with white or lighter-skinned individuals. In generative imaging, the gaps are even more visible. A 2025 analysis found that popular AI image generators depicted white people with significantly higher accuracy than people of color, often mis-rendering skin tone or facial features entirely. Those aren’t glitches. They’re reflections of who’s over-represented in the data—and who isn’t.

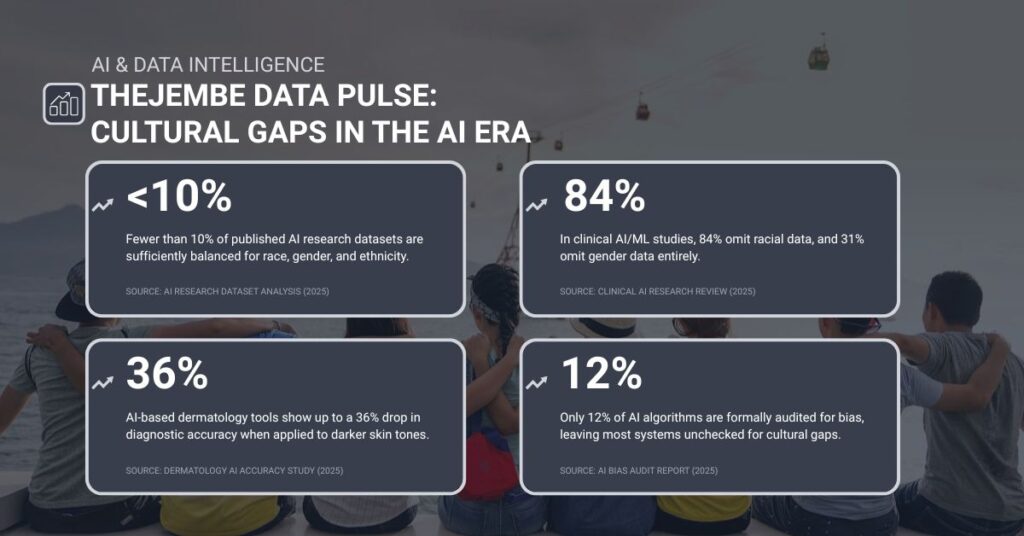

The problem is even more pronounced in healthcare, where lives depend on precision. A 2025 review found that 84% of clinical AI/ML studies did not disclose the racial composition of their data, and nearly one-third omitted gender data altogether. Another study documented a 27–36% drop in diagnostic accuracy for skin-disease AI models when applied to darker skin tones. When entire groups are missing from the ground truth, the algorithms built on that ground begin to fail them.

The danger is not just statistical. When datasets omit entire cultural groups, they also omit the lived realities, emotional drivers, linguistic nuance, and behavioral cues that make insights meaningful. Brands depend on AI to predict who will buy, who will churn, who will click, and who will convert. But if your underlying dataset reflects a narrow slice of the population, your predictions are nothing more than a mirror held up to the majority. AI can only reflect what it’s been fed. If the meal is biased, the output is biased.

This is how brands continue to “optimize” toward a mythical median consumer– white, English-speaking, urban, tech-comfortable, and predictable. Meanwhile, the audiences that actually shape culture—multicultural, multilingual, intersectional, increasingly global in influence—remain under-represented in the data that guides decisions. A dataset that looks comprehensive on a dashboard can still be culturally blank.

AI bias is often talked about as a technical problem, but it is produced by structural decisions: where data is sourced, whose experiences are considered “standard,” and which groups are easiest to reach. The imbalance shows up everywhere. A study analyzing a million hours of audio in generative-AI music training sets found only 14.6% represented music from the Global South. That kind of erasure happens across domains—imagery, voice tech, text corpora, recommendation engines, and market research. The models built on top of those datasets behave accordingly. They flatten culture instead of accurately reflecting it.

Brands continue to assume scale will save them. If you have a million survey responses, surely it must reflect everyone… right? But scale without representation is just quantity without truth. You get dense data and thin culture—millions of rows that tell you what’s easy to capture rather than what’s actually happening. Many companies never detect the blind spots because their KPIs aren’t built to reveal them. Algorithms performing “well” for the majority hide their failures with the very groups brands claim to want to reach.

The solution is not to abandon AI; it is to rebuild the foundation. Cultural fluency is the only scalable fairness. Without deliberately representative data, algorithmic intelligence will always fall short of real-world complexity. Brands that want accuracy need more than machine efficiency—they need methodological honesty. That means sourcing data intentionally, validating outputs with human cultural context, building mixed-method feedback loops, and treating missing data not as a minor flaw but as a critical risk signal.

Cultural intelligence isn’t a soft discipline. It’s data integrity.

In 2026 and beyond, cultural invisibility is the blind spot that will shape the next wave of brand missteps, public backlash, and misaligned strategy. And consumers will not be shy about calling it out, loudly, and often. The demographics driving cultural momentum—Black consumers, Hispanic consumers, Asian Americans, multicultural Gen Z, diaspora communities, LGBTQ+ communities—are precisely the ones most likely to be underrepresented in the datasets powering modern AI engines. If your models can’t see them, your strategies won’t reach them.

It is no longer enough for brands to “use AI.” They have to interrogate the data underneath it. They have to question who’s missing. Because the future of insight isn’t about bigger data—it’s about better data. And better data starts with representation that reflects the real world, not the convenient one.

Cultural fluency isn’t a decorative add-on. It’s the difference between thinking you know your audience and actually knowing them. And in an AI-driven world, that gap is only getting wider for the brands that don’t keep up.